If you’ve noticed that AI is everywhere now (in your DMs, your emails, your search results, your work apps), here’s the plot twist nobody expected:

Behind every “smart” AI model, millions of humans are quietly training it.

Yep. Every time an AI chatbot gives an accurate answer, recognizes an object, responds safely, or translates a sentence correctly… someone somewhere labeled, rated, tagged, or fixed data to make that happen.

Welcome to the world of AI microtasks, one of the fastest-growing gig income opportunities of 2026.

From students earning side income by rating AI responses, to linguists working on multilingual rating tasks, to remote freelancers doing annotation work for Fortune 500 companies, this is a real, legitimate, and rapidly scaling category of digital work.

And the best part?

You don’t need coding skills. You don’t need prior tech experience. You just need accuracy, patience, and language ability.

This blog is your deep dive into AI microtask jobs: what they are, how much you can earn, who’s hiring, how to get started, and how freelancers can use Truelancer to land high-quality AI trainer projects in 2026.

Let’s get into it.

The 2026 Explosion: Why AI Microtask Jobs Are Suddenly Everywhere

In 2025–2026, the pace of AI development skyrocketed.

- New language models every quarter

- Voice assistants becoming smarter

- Enterprises deploying chatbots for everything

- AI regulations requiring more human oversight

- Multilingual AI becoming a global priority

All this growth led to a massive bottleneck:

AI can’t train itself. It needs humans, millions of them.

Large AI companies, from OpenAI, Meta, Google, and Anthropic to enterprise-level players like Wipro, Innodata, and Shaip, are hiring “AI Trainers,” “Data Annotators,” or “Raters.”

A relatable real-world moment:

Think about when an AI is asked to detect sarcasm in a sentence that has zero emojis.

Or think about when an AI is trained to read between the lines.

Or when ChatGPT finally answers your question correctly on the second attempt.

That correction didn’t happen magically. Humans trained the model to behave better.

Microtask workers (remote, multilingual, spread across the globe, and working flexible hours) are becoming the backbone of this AI revolution.

And the demand is nowhere close to slowing down.

What Exactly Are Microtask AI Jobs?

Microtask AI jobs are tiny, highly specific tasks that assist artificial intelligence systems in learning, improving, and refining their capabilities. These tasks are the building blocks that allow AI models to understand complex human behaviors, recognize patterns, and make intelligent decisions.

Microtask Breakdown

Think of microtasks as the training wheels for AI models. They’re usually simple, well-defined, and fast to complete, typically taking anywhere from a few seconds to a few minutes per task. Because of their simplicity, they often don’t require much technical expertise, making them accessible to a wide range of people. Workers are paid either per task or per hour, depending on the platform and project specifications.

Why Are They So Important?

Imagine AI as a toddler trying to make sense of the world. It needs constant guidance and correction to grow smarter. In this analogy, microtask workers are the ones teaching the AI words, helping it correct mistakes, and showing it what’s right and wrong. These seemingly small, repetitive tasks contribute to training AI models in ways that are scalable, flexible, and incredibly effective.

- Teaching AI words: Labeling text and teaching AI the meaning of sentences or phrases.

- Correcting its mistakes: Flagging inappropriate or inaccurate responses to guide AI toward improvement.

- Showing right vs. wrong: Helping AI distinguish correct information from incorrect, inappropriate, or biased content.

- Helping AI understand images: Annotating images or highlighting objects, enabling AI to better “see” and understand visual data.

- Explaining human thought: Assisting AI in mimicking human reasoning and emotional responses by labeling sentiment, intent, and tone.

Real-World Microtasks

To make this concrete, let’s break down some real examples of what microtask AI jobs look like in action:

- Tagging emotions in a sentence: Assigning labels like neutral, positive, or negative based on the sentiment of a statement or sentence.

- Highlighting objects in an image: Identifying and marking objects (like cars, people, or animals) within images to help AI “see” and interpret visuals more accurately.

- Rating AI-generated answers: Evaluating the quality, accuracy, and relevance of responses produced by AI models (e.g., does it make sense? Is it factually correct?).

- Categorizing short video clips: Sorting short video clips into categories based on their content. This is particularly useful in training models that understand and generate videos.

- Testing chatbot accuracy: Engaging with chatbots to evaluate whether they respond correctly and contextually, improving the bot’s ability to communicate effectively.

- Checking outputs for bias or harm: Reviewing AI outputs (e.g., text or decisions) to ensure they aren’t biased, discriminatory, or harmful to individuals or communities.

- Recording short voice clips: Contributing to voice datasets by recording sentences or phrases, which can be used to improve AI’s ability to understand and generate speech.

- Ranking AI responses: Comparing two AI-generated answers to a question and selecting the more accurate or relevant response.

📈 Why Microtask Jobs Will Be a Major 2026 Trend

Microtask AI jobs are no longer a small niche; they are becoming a global movement. Here’s why 2026 will be the year these jobs truly explode in popularity and demand. AI companies are facing four urgent needs, and microtask workers are solving them perfectly:

1. The Explosion of AI Models

AI is expanding at breakneck speed, and it’s not just about a few isolated models anymore. The landscape is evolving to include:

- Domain-specific LLMs (Large Language Models): Custom AI designed to understand particular industries or topics, such as healthcare, finance, or tech.

- Enterprise Chatbots: Businesses are rolling out custom-built AI chatbots for everything from customer support to sales.

- Multilingual Voice Assistants: The need for voice assistants that understand and respond to different languages, dialects, and cultural nuances.

- Safety-first AI Evaluators: AI that not only works but works ethically, evaluating outputs for safety, bias, and fairness.

All of these models require millions of human-reviewed samples to function properly. Whether it’s correcting an AI’s response or ensuring a system accurately recognizes diverse accents, the human touch is essential for training AI. Microtask workers are the perfect solution to scale this process rapidly and efficiently.

2. The Insane Multilingual Demand

In 2026, English-only AI will be a thing of the past. The rise of global AI demands a massive multilingual workforce to keep up with the sheer number of languages needed for AI to function globally. We’re talking about 47+ languages, and growing.

Consider the list of languages companies need AI systems to support:

- Hindi

- Tamil

- Finnish

- Filipino

- Spanish

- Arabic

- Swahili

- Korean

- Polish, and more

These are just a few examples. Regions like India, Latin America, Africa, and Southeast Asia are quickly becoming global annotation hubs, where skilled annotators are needed to ensure AI systems work across cultures and languages.

The multilingual revolution is pushing microtask jobs to the forefront because businesses can’t afford to ignore non-English markets. AI needs to be localized, and microtasks offer the perfect low-barrier entry point for training these AI systems at massive scale.

3. AI Regulations Are Coming

2025 marked a turning point for AI regulation. Governments around the world are realizing that AI can’t just evolve unchecked; it needs guardrails. New laws and guidelines are being established globally to ensure AI is safe, fair, and ethical. This means human oversight is increasingly becoming mandatory. Depending on the jurisdiction and the use case, companies are now expected to implement:

- Bias checks: Ensuring that AI doesn’t propagate or perpetuate existing biases in decision-making.

- Toxicity evaluations: Flagging harmful or offensive content generated by AI.

- Cultural relevance reviews: Making sure AI systems are culturally aware and sensitive to different societal norms.

- Human approval loops: Humans must approve certain decisions made by AI, ensuring accountability and transparency.

This regulation wave means companies need human raters who can review AI outputs in real time, making sure AI is ethical and safe. Microtask workers are ideally positioned to fulfill this demand, monitoring and rating AI results in a flexible, scalable way that is perfect for meeting regulatory requirements.

4. Decentralized Community Rater Programs

The world of AI ratings is about to shift in a big way. Companies like OpenAI, Anthropic, and Meta are pioneering open “AI rater communities”, decentralized programs where people from all over the globe can participate in evaluating AI outputs. This is the next big wave in the AI training ecosystem, allowing AI systems to be fine-tuned by a diverse, decentralized workforce.

These community rater programs give anyone, anywhere, the opportunity to contribute to AI’s development and get paid for it. The global workforce will be crowdsourced, allowing for the scale and diversity needed to train AI models effectively and responsibly.

This shift towards decentralization is one of the most exciting things to watch. AI will no longer be just a corporate endeavor; it will be a global, community-driven project. And that means the demand for microtask workers will soar as companies tap into the collective power of millions of people to help AI evolve.

Microtasks = Digital Oil, Human Annotators = The New Global Workforce

In the coming years, microtask jobs are set to become the new digital oil: the fuel that powers the rapid growth of AI. Trained human annotators are the new global workforce, a distributed, diverse team of individuals around the world helping shape AI into the sophisticated tool it is meant to be.

As AI becomes more integrated into every industry and aspect of life, the demand for microtasks will skyrocket. Whether it’s a startup launching its first chatbot, a large corporation rolling out a multilingual voice assistant, or a government entity ensuring AI stays ethical and responsible, the need for human oversight will never go away. Microtask workers will continue to be the unsung heroes behind the scenes, making sure AI models are human-like, ethical, and accurate.

So, buckle up. The demand for microtask AI jobs is about to explode in ways we’ve never seen before. 2026 is just the beginning.

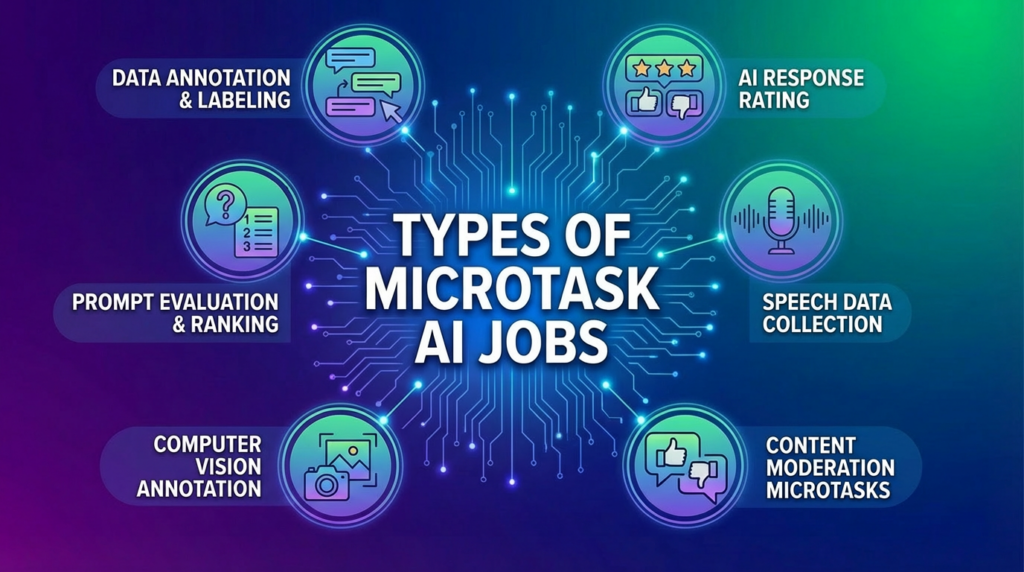

Types of Microtask AI Jobs (with Real-Life Scenarios)

Microtasks vary in complexity, and each type plays a unique role in improving AI systems. Below are the most common microtasks, along with real-world examples to help you understand their application.

a. Data Annotation & Labeling

What it is: Data annotation and labeling are foundational to AI development. These tasks involve marking or categorizing data, helping AI systems “learn” to recognize patterns.

Tasks Include:

- Drawing boxes around objects in images

- Categorizing products

- Tagging text (e.g., labeling positive or negative sentiments)

- Sorting images by type

Real Scenario:

A ride-sharing company is training self-driving cars to recognize bicycles at night.

Your task: You’re shown 50 images and must highlight bicycles using an on-screen tool to help the AI identify them. This small but crucial task helps AI systems recognize objects accurately, improving vehicle safety on the roads.
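Curious what that on-screen box actually turns into? Here is a minimal sketch of a single bounding-box label, assuming a COCO-style [x, y, width, height] format in pixels. The BoxLabel class, its field names, and the validity check are hypothetical illustrations; real projects define their own schema and quality rules in their annotation guidelines.

```python
from dataclasses import dataclass


@dataclass
class BoxLabel:
    """Hypothetical bounding-box annotation; box is [x, y, width, height] in pixels."""
    image_id: str
    label: str
    x: float
    y: float
    width: float
    height: float

    def is_valid(self, image_width: int, image_height: int) -> bool:
        # A usable box has a positive size and stays inside the image frame.
        return (
            self.width > 0
            and self.height > 0
            and self.x >= 0
            and self.y >= 0
            and self.x + self.width <= image_width
            and self.y + self.height <= image_height
        )


# One bicycle outlined in a 1280x720 night-time frame (illustrative values only).
box = BoxLabel(image_id="night_0042.jpg", label="bicycle", x=410, y=300, width=180, height=120)
print(box.is_valid(1280, 720))  # True
```

Simple checks like this are the kind of thing annotation tools run automatically, which is why a box that spills past the image edge or has zero size usually gets rejected before it ever reaches a reviewer.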

b. AI Response Rating (LLM Rater Jobs)

What it is: This task involves evaluating the quality and relevance of AI-generated responses. It’s one of the most in-demand microtask roles, especially with the rise of conversational AI and large language models (LLMs).

Tasks Include:

- Judging which answer is more accurate or relevant

- Evaluating politeness and tone

- Identifying bias or harm in responses

- Determining if the response follows instructions

Real Scenario:

You’re shown two chatbot replies to the query: “Explain climate change to a 10-year-old.”

Your task: Pick the reply that is clearer, safer, and more age-appropriate. This ensures AI is helpful and suitable for different audiences.
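Behind the scenes, a judgment like this is typically saved as a small record that pairs the prompt with both replies and your choice. The sketch below assumes a hypothetical schema (prompt, response_a, response_b, preferred, reasons); actual rating platforms use their own formats and rubrics.

```python
from dataclasses import dataclass, field


@dataclass
class PairwiseRating:
    """Hypothetical record of one side-by-side comparison made by a rater."""
    prompt: str
    response_a: str
    response_b: str
    preferred: str  # "A" or "B"
    reasons: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject anything other than a clear choice between the two replies.
        if self.preferred not in ("A", "B"):
            raise ValueError("preferred must be 'A' or 'B'")

    def winner(self) -> str:
        # Return the text of the reply the rater picked.
        return self.response_a if self.preferred == "A" else self.response_b


rating = PairwiseRating(
    prompt="Explain climate change to a 10-year-old.",
    response_a="Climate change is the long-term shift in global temperatures driven by anthropogenic forcing.",
    response_b="The Earth is wrapped in a blanket of gases. We're making the blanket thicker, so the planet is slowly warming up.",
    preferred="B",
    reasons=["clearer", "age-appropriate"],
)
print(rating.winner())
```

Thousands of records like this, collected from many raters, are what teach a model which kinds of answers people actually prefer.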

c. Prompt Evaluation & Ranking

What it is: Prompts are the instructions or questions given to an AI model. This task helps improve AI performance by refining how prompts are written and ranking the AI’s output.

Tasks Include:

- Rewriting or improving prompts to get better answers

- Ranking multiple outputs based on quality

- Rating translation accuracy

- Detecting logical errors or inconsistencies in AI responses

Real Scenario:

You are given three AI translations of the same French sentence.

Your task: Rank them from most natural to least natural. This ensures the AI’s translation model is both accurate and fluid, crucial for applications like multilingual chatbots or global communication tools.

d. Speech Data Collection

What it is: AI needs voice data to recognize different accents, tones, and speech patterns. This task helps train AI models to understand and produce human speech more effectively.

Tasks Include:

- Reading sentences aloud

- Recording voice commands

- Speaking in various tones or environments

Real Scenario:

You’re asked to record yourself saying:

“Call my brother” in three tones: normal, shouting, and whispering.

Your recordings help AI systems understand different vocal expressions, improving voice assistants like Siri or Alexa.

e. Computer Vision Annotation

What it is: Computer vision models need annotated images to recognize visual data, such as objects, faces, or abnormalities in pictures. This plays a critical role in industries like healthcare, autonomous driving, and security.

Tasks Include:

- Highlighting or marking objects within images

- Labeling visual data for machine learning models

- Identifying and tagging specific patterns

Real Scenario:

A healthcare AI project is designed to detect tumors in medical images.

Your task: Outline regions in X-ray or MRI scans where tumors might be present (work like this usually requires annotators with medical training). This helps AI systems accurately identify and flag potential health risks, assisting doctors in making timely diagnoses.

f. Content Moderation Microtasks

What it is: Content moderation ensures that online content, including AI-generated content, adheres to safety guidelines. This task involves reviewing material to make sure it’s free from harmful, offensive, or misleading content.

Tasks Include:

- Detecting hate speech or offensive language

- Identifying violence, explicit content, or harmful material

- Spotting misinformation or inappropriate content

Real Scenario:

You review a social media post for hate speech or misinformation.

Your task: Flag any content that violates platform guidelines. Your flags help train the AI behind the platform to keep it safe and appropriate for all users.

Note: This type of microtask can be more challenging due to the nature of what’s being reviewed, but it is often better paid because it’s essential for keeping AI models responsible and ethical.

These microtasks, while small individually, are critical to AI training and provide highly flexible opportunities for workers worldwide. From improving AI’s understanding of language and speech to helping AI visually interpret the world, these tasks allow companies to create more accurate, inclusive, and reliable AI models.

🏢 Companies Leading the AI Microtask Industry

If you are serious about getting into AI microtask work, it helps to know who is actually driving this industry. These companies are not side players. They are building the data pipelines that power real-world AI systems used by enterprises, governments, and global tech leaders.

Below is a clear, practical breakdown of the major players and the kind of work they offer.

Shaip

Shaip focuses on high-quality, enterprise-grade datasets, especially in industries where accuracy matters a lot. Think healthcare, retail intelligence, and conversational AI.

What Shaip is known for

- Medical and clinical datasets

- Speech and voice data across multiple languages

- Linguistic and sentiment-based annotation

- Large-scale, quality-controlled AI training projects

Common roles they hire for

- Speech data collectors

- Medical data labelers

- Sentiment analysts

- Multilingual transcription specialists

Real project example

A healthcare AI company wants to train a system to understand patient emotions.

Your task could be to label 5,000 doctor–patient conversation transcripts by topic and sentiment, such as anxiety, relief, confusion, or urgency.

This kind of work directly improves how AI assists doctors and healthcare providers.

Innodata

Innodata is one of the most respected names in the data annotation and AI training space. They work closely with enterprise AI teams, which means their projects are usually large, structured, and long-term.

What Innodata specializes in

- Enterprise AI training

- High-accuracy content annotation

- AI safety and compliance reviews

- Financial, legal, and technical datasets

Common roles they hire for

- Linguistic evaluators

- Data labelers

- AI safety raters

- Research-focused annotators

Real project example

A financial services company is building an AI assistant for investment research.

Your task could be to evaluate thousands of AI-generated answers and check them for factual accuracy, misleading claims, or missing context.

This type of work helps prevent AI from giving incorrect financial advice.

OpenAI Community Jobs

This is one of the most competitive and high-demand microtask ecosystems right now. OpenAI relies heavily on human feedback to improve model quality, safety, and usefulness across different regions and languages.

What makes OpenAI community work unique

- Global rater programs

- Strong focus on safety and ethics

- Language and culture-specific evaluation

- Direct impact on how large AI models behave

Common roles available

- AI response raters

- Safety and bias evaluators

- Prompt testers

- Model improvement contributors

Real project example

You are shown 1,000 pairs of GPT-style responses to the same question.

Your job is to rate which response is clearer, more helpful, and less biased.

These decisions directly influence how future AI models respond to millions of users.

Scale AI, Appen, and Toloka (Honorable Mentions)

These platforms have built massive global microtask ecosystems and often act as the backbone for large AI training operations.

What they typically cover

- Image and video labeling

- AI response evaluation

- Drone and satellite image annotation

- Quality assurance testing for AI systems

Why they matter

They run some of the largest annotation pipelines in the world and frequently support projects for self-driving cars, e-commerce platforms, and government AI systems.

If you are starting out, these platforms often provide entry-level opportunities that help you build experience quickly.

Why Knowing These Companies Matters

Understanding who leads the AI microtask industry helps you:

- Apply to the right platforms

- Prepare skills that actually match real projects

- Avoid low-quality or unreliable task providers

- Position yourself for better-paying, long-term work

These companies are not experimenting. They are scaling AI for the real world. And behind every successful AI model is a global network of microtask workers making it smarter, safer, and more human.

Skills You Need to Start AI Microtasks

Beginner skills:

- English or native-language fluency

- Attention to detail

- Ability to follow guidelines

- Consistency

- Basic computer literacy

Advanced skills (higher-paying roles):

- Linguistic knowledge

- Knowledge of annotation tools

- Understanding AI behavior

- Prior QA experience

How Much Can You Actually Earn?

Earnings vary by task, language, company, and accuracy.

Typical ranges:

- Simple annotation: $5–$12/hour

- AI response rating: $10–$25/hour

- Linguistic evaluation: $20–$45/hour

- Voice data recording: $0.30–$2 per clip

Realistic weekly earning example:

- 2 hours/day × 7 days/week × $15/hour (response rating)

= $210 per week → about $840/month (4 weeks)

Higher-skilled annotators often earn $1,200–$2,500/month.
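To plug in your own numbers, here is a minimal sketch of the same arithmetic. It assumes a flat hourly rate, 7 working days per week, and 4 weeks per month (the assumptions behind the $210 and $840 figures); many platforms pay per task instead, so treat the result as a rough estimate. The function name is just for illustration.

```python
def estimate_earnings(
    hours_per_day: float,
    days_per_week: int,
    hourly_rate: float,
    weeks_per_month: int = 4,
) -> tuple[float, float]:
    """Return (weekly, monthly) earnings for a steady schedule at a flat hourly rate."""
    weekly = hours_per_day * days_per_week * hourly_rate
    monthly = weekly * weeks_per_month
    return weekly, monthly


# The example above: response rating, 2 hours/day, 7 days/week, $15/hour.
weekly, monthly = estimate_earnings(hours_per_day=2, days_per_week=7, hourly_rate=15)
print(f"${weekly:.0f}/week, ${monthly:.0f}/month")  # $210/week, $840/month
```

At 5 days a week instead of 7, the same function gives $150/week and $600/month, a useful reality check if you plan to take weekends off.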

How Freelancers Can Use Truelancer to Get AI Trainer Roles

Companies across the world now use Truelancer to hire:

- AI trainers

- Annotators

- Linguistic evaluators

- Safety raters

- Prompt reviewers

- Content labelers

Why companies prefer Truelancer:

- Access to multilingual talent

- Faster project staffing

- Quality-checked freelancers

- Ability to scale large annotation teams

How freelancers can stand out on Truelancer:

- Add “AI Trainer / Annotator / Data Labeler” to your profile

- Highlight the languages you speak

- Mention past tasks you’ve worked on

- Show your accuracy or QC score, if you have one

- Add small sample tasks (e.g., labeled screenshots)
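If a platform hasn’t given you a QC number, one common approach is to measure how often your labels match a set of reference (“gold”) answers for the same items. Here is a minimal sketch under that assumption; the function name and the sample sentiment labels are hypothetical.

```python
def qc_accuracy(my_labels: dict[str, str], gold_labels: dict[str, str]) -> float:
    """Fraction of gold-labeled items where your label matches the reference label."""
    # Only score items that appear in both sets.
    checked = [item for item in gold_labels if item in my_labels]
    if not checked:
        raise ValueError("no overlapping items to score")
    correct = sum(my_labels[item] == gold_labels[item] for item in checked)
    return correct / len(checked)


mine = {"s1": "positive", "s2": "neutral", "s3": "negative", "s4": "positive"}
gold = {"s1": "positive", "s2": "negative", "s3": "negative", "s4": "positive"}
print(f"{qc_accuracy(mine, gold):.0%}")  # 75%
```

Keep in mind that platforms often use their own scoring rules (for example, weighting some items more heavily), so quote the score a platform reports whenever you have one.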

The Future of AI Microtask Jobs (2026–2030 Predictions)

Here’s what’s coming:

1. More long-term AI trainer roles

Microtasks → long-term evaluator contracts.

2. Rise of multi-language evaluation

If you speak more than one language, your value goes up significantly.

3. Specialized industry training

Medical, legal, automotive, and finance projects all need expert annotators.

4. Human-in-the-loop systems

AI will forever need human oversight.

5. Global regulation = more human raters

Governments will require human approval for:

- safety

- fairness

- misinformation checks

6. AI evaluation may become a formal career

Expect job titles like:

- AI Quality Analyst

- AI Behavior Evaluator

- Prompt Optimization Reviewer

- Model Safety Specialist

Conclusion: AI Microtask Jobs Are the New Age Digital Work

2026 is shaping up to be the biggest year ever for AI microtasks.

- No coding required

- Flexible hours

- Scalable income

- Growing global demand

- Perfect for students, freelancers, and remote workers

- Supported by platforms like Truelancer

If you’ve ever wanted to enter the AI industry without needing advanced technical skills, microtask AI jobs are your doorway.

And if you’re a freelancer ready to start earning from AI trainer work, annotation projects, or evaluation tasks…

Truelancer is now actively onboarding global talent for upcoming 2026 AI projects.

Truelancer: AI Trainer Supplier

If you’re excited about the rise of AI microtask jobs and want to contribute to the very companies building the future of artificial intelligence, from data annotation to LLM evaluation to multilingual rating, now is the perfect time to step in.

Truelancer actively supplies trained AI annotators, evaluators, and microtask workers to leading global companies in the AI ecosystem.

Whether it’s large-scale projects in data labeling, prompt evaluation, speech datasets, or safety rating, we build dedicated teams of freelancers who support these organizations behind the scenes.

If you want to join these AI training projects and be part of the workforce powering the next generation of AI systems…

👉 Create your profile on Truelancer and apply for upcoming AI trainer, evaluator, and annotation roles.

From beginners to multilingual experts to domain specialists, Truelancer connects you with real, paid opportunities inside the world’s fastest-growing AI companies.

Start contributing to the future of AI.

Start earning as an AI trainer.

Start your journey today, only on Truelancer.